Making regular backups of your data is important. I hope no one disputes that. Of course, some data is more important than other data, but if you want to keep things around, I recommend storing your data in a good place and making sure backups run completely automatically.


I do this using an Ansible role, my Nextcloud, duplicity and, for a few days now, a cloud backup provider called Backblaze. The backups themselves have been running for quite a while, but until this switch I used Hetzner storage boxes for them.

Backup strategy

Generally speaking, everyone should be aware of the 3-2-1 backup strategy. While there is some room for customization, it’s never wrong to apply it. The idea is that you keep 3 copies of your data: 2 of them on-site on two different media, and a third one off-site, either at a friend’s, with family or at a cloud provider.

To achieve this, I keep, for example, my documents on my local devices, but I also use the desktop client to synchronize them to my Nextcloud instance. That’s two copies of the files on different media. The off-site copy is the one I’ll talk about in this article: it uses duplicity to create a backup of Nextcloud.
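To make the rule concrete, here is a minimal Python sketch (not part of the setup itself) that models the three copies described above and checks them against 3-2-1. The `Copy` type, the medium labels and the exact reading of the rule (at least three copies, at least two distinct media, at least one off-site) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of the data; all field values are illustrative labels."""
    name: str
    medium: str    # e.g. "laptop SSD", "server disk", "object storage"
    offsite: bool  # True if stored away from the on-site infrastructure


def satisfies_3_2_1(copies: list[Copy]) -> bool:
    """Check: >= 3 copies, >= 2 distinct media, >= 1 copy off-site."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.medium for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


setup = [
    Copy("documents on local devices", "laptop SSD", offsite=False),
    Copy("Nextcloud sync", "server disk", offsite=False),
    Copy("duplicity backup", "object storage", offsite=True),
]
print(satisfies_3_2_1(setup))      # True
print(satisfies_3_2_1(setup[:2]))  # False: two copies, none off-site
```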

It’s important to consider that, since your Nextcloud instance counts as “on-site”, you should use a different provider for your off-site backup. That’s the reason I’m switching away from Hetzner storage boxes. They are great and work perfectly fine, but since I’m moving more and more services to Hetzner Cloud instances, I don’t want to store my backups there as well.

Duplicity backs up directories by producing encrypted tar-format volumes and uploading them to a remote or local file server. Because duplicity uses librsync, the incremental archives are space-efficient and only record the parts of files that have changed since the last backup.
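The idea of recording only changed parts can be illustrated with a toy Python sketch that hashes fixed-size blocks of two file versions and reports which blocks differ. This is a deliberate simplification, not librsync’s algorithm: librsync uses rolling checksums so that an insertion early in a file does not mark every following block as changed, which fixed blocks cannot handle. The tiny block size is only for readability.

```python
import hashlib


def block_hashes(data: bytes, block_size: int = 4) -> list[str]:
    """Hash consecutive fixed-size blocks (toy block size for illustration)."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = 4) -> list[int]:
    """Indices of blocks in `new` that are new or differ from `old` at the same position."""
    old_h = block_hashes(old, block_size)
    new_h = block_hashes(new, block_size)
    return [i for i, h in enumerate(new_h) if i >= len(old_h) or old_h[i] != h]


old = b"AAAABBBBCCCCDDDD"
new = b"AAAABBBBXXXXDDDDEEEE"
print(changed_blocks(old, new))  # [2, 4]: only these blocks need storing
```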

Two different cloud providers are important because mistakes happen: anything from an (accidental) deletion of all products in my account at a cloud provider, through large-scale hardware failures, to a payment method that stops working. In all of these cases, if your off-site backup has anything in common with your “on-site” copies, you are in trouble. So spreading the risk is important here.
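One way to check the “nothing in common” rule is to list what each copy depends on (provider, account, payment method) and look for overlaps. The sketch below only illustrates that idea; every label in it is a hypothetical placeholder, and using a separate payment method for the off-site copy is an assumption, not something the setup above prescribes.

```python
def shared_dependencies(onsite: dict[str, set[str]], offsite: set[str]) -> set[str]:
    """Return every dependency the off-site copy shares with any on-site copy."""
    common: set[str] = set()
    for deps in onsite.values():
        common |= deps & offsite
    return common


# Hypothetical dependency labels, for illustration only.
onsite = {"Nextcloud": {"provider:hetzner", "account:A", "payment:card-1"}}
storage_box = {"provider:hetzner", "account:A", "payment:card-1"}
backblaze = {"provider:backblaze", "account:B", "payment:card-2"}

print(sorted(shared_dependencies(onsite, storage_box)))
# ['account:A', 'payment:card-1', 'provider:hetzner']
print(shared_dependencies(onsite, backblaze))  # set()
```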

But enough of the strategy and theory, let’s get started.

Account creation and setting up the bucket

For server backups with duplicity, Backblaze offers its so-called “B2” storage. It’s basically like Amazon’s S3 storage, just with less proxying; in exchange, storing data takes one more API request, because you first ask for an upload URL and then send the data there.
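For the curious, this Python sketch builds (but does not send) the three requests of an upload through B2’s native API, assuming version 2 of that API: authorize with the key, request an upload URL for the bucket, then POST the file straight to that URL. The `PlannedRequest` type is a hypothetical helper; sending the requests (for example with `urllib.request`) and reading `apiUrl`, `authorizationToken` and `uploadUrl` from the JSON responses is left out. Duplicity’s B2 backend handles all of this for you through b2sdk.

```python
import base64
import hashlib
import json
from dataclasses import dataclass, field
from urllib.parse import quote


@dataclass
class PlannedRequest:
    """Hypothetical container for a request we would send."""
    method: str
    url: str
    headers: dict[str, str] = field(default_factory=dict)
    body: bytes = b""


def authorize(key_id: str, app_key: str) -> PlannedRequest:
    """Step 1: b2_authorize_account with HTTP Basic auth (keyID:applicationKey)."""
    token = base64.b64encode(f"{key_id}:{app_key}".encode()).decode()
    return PlannedRequest(
        "GET",
        "https://api.backblazeb2.com/b2api/v2/b2_authorize_account",
        {"Authorization": f"Basic {token}"},
    )


def get_upload_url(api_url: str, auth_token: str, bucket_id: str) -> PlannedRequest:
    """Step 2: the extra request that returns an upload URL and upload token."""
    return PlannedRequest(
        "POST",
        f"{api_url}/b2api/v2/b2_get_upload_url",
        {"Authorization": auth_token},
        json.dumps({"bucketId": bucket_id}).encode(),
    )


def upload_file(upload_url: str, upload_token: str, name: str, data: bytes) -> PlannedRequest:
    """Step 3: send the bytes directly to the URL returned by step 2."""
    return PlannedRequest(
        "POST",
        upload_url,
        {
            "Authorization": upload_token,
            "X-Bz-File-Name": quote(name),          # percent-encoded file name
            "Content-Type": "b2/x-auto",            # let B2 detect the type
            "X-Bz-Content-Sha1": hashlib.sha1(data).hexdigest(),
            "Content-Length": str(len(data)),
        },
        data,
    )
```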

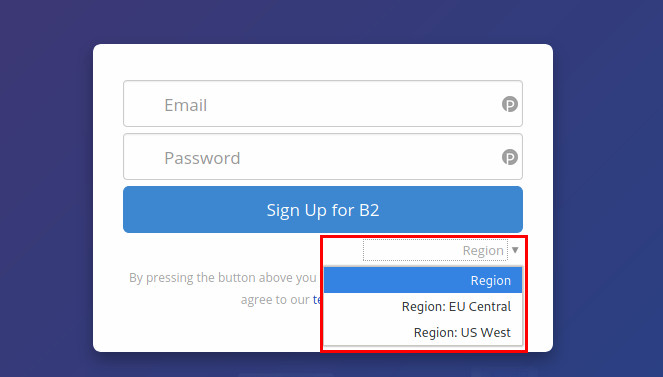

During account creation, you select the region where your backups are stored. Since I prefer to keep my data in the EU, I explicitly selected that region in the sign-up form. Make sure you make your choice there: it’s easy to miss, and I couldn’t find any setting to change it after account creation.

Otherwise, it’s like everywhere else: enter an email address and a password, set up 2FA, confirm your email address, add billing information and create a private “bucket” to hold your backup data.

Then switch to the “App Keys” dialog, where you can create access tokens for your buckets; duplicity will use these to back up your data.

In the first field, enter the name of the key. To keep it simple, I use the same name as the bucket itself, but you can be creative here. In the second field, “All” is selected by default. Of course, you shouldn’t allow “machine A” to delete the backups of “machine B”, so I highly recommend restricting each access token to a single bucket. Now click “Create New Key” and your access token will be generated.
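The same per-bucket restriction can also be expressed through B2’s native `b2_create_key` call. This Python sketch only builds the JSON request body; the capability list is a hypothetical choice for a backup client, so verify what your duplicity and b2sdk versions actually need before relying on it.

```python
import json


def restricted_key_request(account_id: str, bucket_id: str, key_name: str) -> str:
    """Build a b2_create_key request body that limits the key to one bucket."""
    body = {
        "accountId": account_id,
        # Hypothetical capability set: no key management, no bucket changes.
        "capabilities": ["listBuckets", "listFiles", "readFiles",
                         "writeFiles", "deleteFiles"],
        "keyName": key_name,
        # The bucket restriction keeps machine A away from machine B's backups.
        "bucketId": bucket_id,
    }
    return json.dumps(body)


print(restricted_key_request("<accountId>", "<bucketId>", "backup-example"))
```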

The provided keyID and applicationKey can be used to create the b2://-URL that is our future backup target. In order to do this, you put everything together following this schema: b2://<keyID>:<applicationKey>@<bucket name>. In this example it results in b2://XXXXXXXXXXXXXX80000000007:XXXXXXXXXXXXXXXXXXXXXXXXXXX1Vd0@backup-example.
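Assembling the URL by hand is easy to get wrong, so here is a small Python sketch that follows the schema above. The refusal of `:`, `@` and `/` inside the parts is my own conservative assumption (those characters would make the URL ambiguous); if your key contains one, check duplicity’s documentation on escaping rather than trusting this helper.

```python
def b2_url(key_id: str, application_key: str, bucket: str) -> str:
    """Assemble b2://<keyID>:<applicationKey>@<bucket name> for duplicity."""
    for label, part in (("keyID", key_id),
                        ("applicationKey", application_key),
                        ("bucket name", bucket)):
        if not part:
            raise ValueError(f"{label} must not be empty")
        # Conservative check (an assumption): refuse characters that would
        # make the URL ambiguous instead of guessing an escaping scheme.
        if any(ch in part for ch in ":@/"):
            raise ValueError(f"{label} contains ':', '@' or '/'")
    return f"b2://{key_id}:{application_key}@{bucket}"


print(b2_url("XXXXXXXXXXXXXX80000000007",
             "XXXXXXXXXXXXXXXXXXXXXXXXXXX1Vd0",
             "backup-example"))
# b2://XXXXXXXXXXXXXX80000000007:XXXXXXXXXXXXXXXXXXXXXXXXXXX1Vd0@backup-example
```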

Make sure to store this URL for later steps.

Duplicity on CentOS 7 with B2 backend

Duplicity is a wonderful tool for backups on Linux. Besides handling all kinds of storage backends, such as FTP, SMB, S3, SFTP and B2, it integrates with GnuPG to encrypt all content of your backups. This is essential, as you usually use “untrusted” storage for off-site backups. While I’m reasonably sure that providers take care of data center security and the destruction of data and disks, I don’t want to risk anything, and encrypting data before sending it means the provider only ever stores ciphertext.
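As a sketch of how the GnuPG integration is used, the Python function below builds a duplicity command line that encrypts to a public key via `--encrypt-key` and starts a new full backup once the last one is older than a given interval (`--full-if-older-than`). The key ID, paths and the one-month interval are hypothetical values; pass the resulting list to `subprocess.run(cmd, check=True)` to execute it.

```python
def duplicity_backup_cmd(source: str, target_url: str, gpg_key_id: str,
                         full_every: str = "1M") -> list[str]:
    """Build a duplicity argv that encrypts the backup to `gpg_key_id`."""
    return [
        "duplicity",
        "--encrypt-key", gpg_key_id,        # encrypt to this GnuPG public key
        "--full-if-older-than", full_every,  # incremental otherwise
        source,
        target_url,
    ]


cmd = duplicity_backup_cmd("/backup", "b2://<keyID>:<applicationKey>@<bucket name>",
                           "ABCD1234")
print(" ".join(cmd))
```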

The main problem is that the B2 backend requires the Python library b2sdk, which is not packaged for CentOS 7. I try to avoid installing things with pip, as this either messes with the system installation or never looks quite clean, so I went for a solution that involves containers instead. As all hosts run moby-engine anyway, why not?

To do this, I built a container image for duplicity: take a Python base image, add duplicity and the b2sdk dependency, send everything through CI, push it to Quay, and there we go.
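How such a container might be started is sketched below in Python, assuming the `docker` CLI that comes with moby-engine. The image name is a placeholder (not the actual image on Quay), and it is assumed that the image’s entrypoint is duplicity and that it runs as root, so keys live in `/root/.gnupg` and duplicity’s default cache in `/root/.cache/duplicity`. The backup directory is mounted read-only; the cache volume keeps duplicity’s local metadata between the otherwise throwaway (`--rm`) runs.

```python
def container_cmd(image: str, backup_dir: str, gnupg_dir: str, cache_dir: str,
                  duplicity_args: list[str]) -> list[str]:
    """Build a `docker run` argv that runs duplicity in a throwaway container."""
    return [
        "docker", "run", "--rm",
        # Snapshots are mounted read-only into the container.
        "-v", f"{backup_dir}:{backup_dir}:ro",
        # Assumption: the image runs as root and reads keys from /root/.gnupg.
        "-v", f"{gnupg_dir}:/root/.gnupg",
        # Persist duplicity's local cache (default ~/.cache/duplicity).
        "-v", f"{cache_dir}:/root/.cache/duplicity",
        image,  # hypothetical image whose entrypoint is duplicity
        *duplicity_args,
    ]


cmd = container_cmd(
    "quay.io/example/duplicity:latest",  # placeholder image name
    "/backup", "/root/.gnupg", "/var/cache/duplicity",
    ["--encrypt-key", "ABCD1234", "/backup",
     "b2://<keyID>:<applicationKey>@<bucket name>"],
)
print(" ".join(cmd))
```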

Bringing it to production with backup_lvm

To get the container running in production, I updated my existing backup solution: the backup_lvm Ansible role, which runs daily and, before this change, ran duplicity installed directly on CentOS. With a few changes, backups now run in a container and are more confined than ever before.

Altogether, the backup_lvm role now works like this:

- Create a directory for the backups

- Take a snapshot of all configured LVM volumes

- Mount those snapshots read-only in the directory that was created

- Back up all volumes and push them, encrypted, to Backblaze

- Unmount all snapshots

- Delete all snapshots
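The steps above can be sketched in Python as a plan of shell commands plus a runner that always attempts the cleanup, even when the backup fails. This is a hypothetical illustration, not the role’s actual tasks: the volume group, the `_backup` snapshot suffix and the snapshot size are made up, and on XFS (CentOS 7’s default) mounting a snapshot next to its origin usually also needs the `nouuid` mount option.

```python
import subprocess
from typing import Callable

Cmd = list[str]


def backup_lvm_plan(vg: str, volumes: list[str], base: str, snap_size: str,
                    backup: Cmd) -> tuple[list[Cmd], Cmd, list[Cmd]]:
    """Return (setup, backup, teardown) commands mirroring the steps above."""
    snaps = [f"{lv}_backup" for lv in volumes]  # hypothetical naming scheme
    mounts = [f"{base}/{lv}" for lv in volumes]
    setup: list[Cmd] = [["mkdir", "-p", *mounts]]
    setup += [["lvcreate", "--snapshot", "--size", snap_size, "--name", s, f"{vg}/{lv}"]
              for lv, s in zip(volumes, snaps)]
    # On XFS, use "ro,nouuid" instead of "ro" here.
    setup += [["mount", "-o", "ro", f"/dev/{vg}/{s}", m]
              for s, m in zip(snaps, mounts)]
    teardown = [["umount", m] for m in mounts]
    teardown += [["lvremove", "-f", f"{vg}/{s}"] for s in snaps]
    return setup, backup, teardown


def run_checked(cmd: Cmd) -> None:
    subprocess.run(cmd, check=True)


def run_backup(setup: list[Cmd], backup: Cmd, teardown: list[Cmd],
               run: Callable[[Cmd], None] = run_checked) -> None:
    """Run setup and backup; afterwards always attempt every teardown step."""
    try:
        for cmd in setup:
            run(cmd)
        run(backup)
    finally:
        for cmd in teardown:
            try:
                run(cmd)
            except Exception as exc:  # keep cleaning up even if one step fails
                print(f"cleanup failed: {' '.join(cmd)}: {exc}")
```

Passing `run` as a parameter keeps the ordering testable without touching real volumes.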

If you don’t want to use my Ansible role, you can still run the container with my settings in a minimal playbook of your own:

Just make sure to set the variables and you are ready to go!


To use GnuPG with duplicity, make sure you generate a key and save a copy of both the private and the public key in a secure place away from your machine, so you can still recover your backup if the machine disappears one day.
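Key generation is interactive (`gpg --gen-key`), but exporting both halves for safekeeping can be scripted. This Python sketch builds the two `gpg` export commands in ASCII-armored form; the key ID and the destination directory (for example a mounted USB stick) are hypothetical. Run each command with `subprocess.run(cmd, check=True)` and store the files somewhere other than the machine being backed up.

```python
def gpg_export_cmds(key_id: str, out_dir: str) -> list[list[str]]:
    """Build gpg commands exporting the public and the private key as .asc files."""
    return [
        ["gpg", "--armor", "--output", f"{out_dir}/{key_id}-public.asc",
         "--export", key_id],
        # The private key is what you need to decrypt and restore backups.
        ["gpg", "--armor", "--output", f"{out_dir}/{key_id}-private.asc",
         "--export-secret-keys", key_id],
    ]


for cmd in gpg_export_cmds("ABCD1234", "/mnt/usb"):
    print(" ".join(cmd))
```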

Update 2020-04-16: If you run CentOS or a similar distribution, you might need to run gpgconf --kill gpg-agent on the host after generating your key and before running the container. Otherwise, the container may fail because it connects to the host’s GPG agent, whose version differs too much from the one inside the container.

Conclusion

Backblaze appears to be a viable alternative to my previous backup storage and helps keep data available even in the worst-case scenario, where the entire account that hosts my infrastructure disappears tomorrow.

With duplicity, daily backups work out nicely, and the containerized version makes it easy to evolve the setup even further. This tutorial should give a detailed insight into choosing a good backup strategy, setting up your backup storage and running your backups automatically. I wish you always have, but never need, a restorable backup!

Amazon S3 has been around for more than ten years now and I have been happily using it for offsite backups of my servers for a long time. Backblaze’s cloud backup service has been around for about the same length of time and I have been happily using it for offsite backups of my laptop, also for a long time.

In September 2015, Backblaze launched a new product, B2 Cloud Storage, and while S3 standard pricing is pretty cheap (Glacier is even cheaper), B2 claims to be “the lowest cost high performance cloud storage in the world”. The first 10 GB of storage are free, as is the first 1 GB of downloads per day. For my small server backup requirements, this sounds perfect.
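To see what the free tier means in practice, this small Python sketch computes the billable amounts from the allowances quoted above (10 GB of storage, 1 GB of downloads per day). Multiply the results by your per-GB rates; no prices are assumed here, so check Backblaze’s current pricing.

```python
def billable_storage_gb(stored_gb: float, free_gb: float = 10.0) -> float:
    """Storage above the free allowance."""
    return max(0.0, stored_gb - free_gb)


def billable_download_gb(daily_downloads_gb: list[float],
                         free_per_day: float = 1.0) -> float:
    """Sum of each day's downloads above the daily free allowance."""
    return sum(max(0.0, d - free_per_day) for d in daily_downloads_gb)


print(billable_storage_gb(8.5))                 # 0.0
print(billable_storage_gb(25.0))                # 15.0
print(billable_download_gb([0.2, 3.0, 0.0]))    # 2.0
```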

My backup tool of choice is duplicity, a command line backup tool that supports encrypted archives and a whole load of different storage services, including S3 and B2. It was a simple matter to create a new bucket on B2 and update my backup script to send the data to Backblaze instead of Amazon.

Here is a simple backup script that uses duplicity to keep one month’s worth of backups. In this example, we dump a few MySQL databases, but it could easily be extended to back up any other data you wish.
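The script’s contents are not reproduced here, so as a stand-in, this is a Python sketch of the same idea: dump each database with `mysqldump`, back up the dump directory with duplicity, then prune sets older than a month with `remove-older-than ... --force`. Database names, paths, the weekly full interval and the target are hypothetical; MySQL credentials are assumed to come from `~/.my.cnf`. Encryption options are omitted, in which case duplicity uses symmetric encryption with the passphrase from the `PASSPHRASE` environment variable.

```python
import subprocess
from pathlib import Path

# Hypothetical values: adjust to your databases, paths and bucket.
DATABASES = ["app", "wiki"]
DUMP_DIR = Path("/var/backups/mysql")
TARGET = "b2://<keyID>:<applicationKey>@<bucket name>"


def backup_commands(databases: list[str], dump_dir: Path, target: str,
                    full_every: str = "1W", keep: str = "1M") -> list[list[str]]:
    """Dump each database, back up the dumps, then drop sets older than `keep`."""
    cmds = [
        ["mysqldump", "--single-transaction",
         f"--result-file={dump_dir / (db + '.sql')}", db]
        for db in databases
    ]
    cmds.append(["duplicity", "--full-if-older-than", full_every,
                 str(dump_dir), target])
    cmds.append(["duplicity", "remove-older-than", keep, "--force", target])
    return cmds


def main() -> None:
    DUMP_DIR.mkdir(parents=True, exist_ok=True)
    for cmd in backup_commands(DATABASES, DUMP_DIR, TARGET):
        subprocess.run(cmd, check=True)  # stop at the first failing step
```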

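The script runs daily at 04:00 via this crontab entry, which appends its standard output to a log file:
0 4 * * * /root/b2_backup.sh >>/var/log/duplicity/backup.log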